Synchronization-inspired Interpretable Neural Networks

Abstract

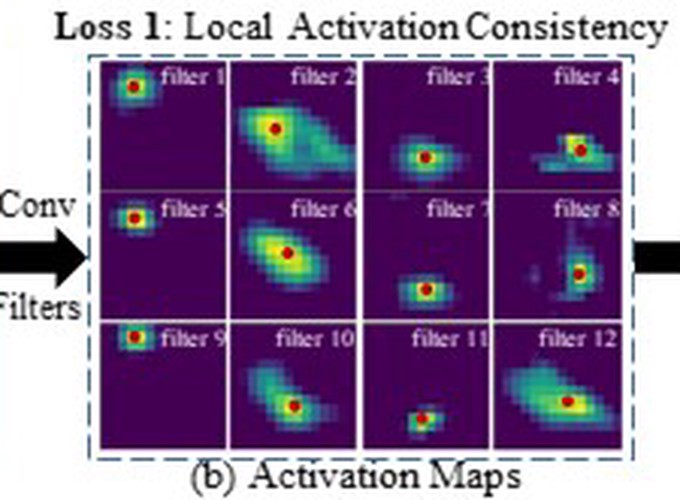

Synchronization is a ubiquitous phenomenon in nature that enables the orderly presentation of information. In the human brain, for instance, functional modules such as the visual, motor, and language cortices form through neuronal synchronization. Inspired by biological brains and previous neuroscience studies, we propose an interpretable neural network that incorporates a synchronization mechanism. The basic idea is to constrain each neuron, such as a convolution filter, to capture a single semantic pattern, while synchronizing similar neurons so that interpretable functional modules form. Specifically, we regularize each neuron's activation map to concentrate around the focus position of its activated pattern in a sample. Moreover, neurons interact locally with one another, and similar neurons are adaptively synchronized during training. Because this aggregation is local, it preserves the globally distributed nature of the network's representation while keeping that representation reasonably interpretable. To analyze neuron interpretability comprehensively, we introduce a series of novel evaluation metrics covering multiple aspects. Qualitative and quantitative experiments demonstrate that the proposed method outperforms many state-of-the-art algorithms in terms of interpretability. The resulting synchronized functional modules show module consistency across data and semantic specificity within modules.
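The two ingredients named above, a locality constraint on each activation map and local synchronization of similar neurons, can be illustrated with a minimal NumPy sketch. This is not the paper's formulation: the function names `locality_penalty` and `sync_step`, the squared-distance penalty, the cosine-similarity neighborhood, and the parameters `eps` and `step` are all assumptions chosen only to make the idea concrete.

```python
import numpy as np


def locality_penalty(act_map):
    """Hypothetical locality term: activation-weighted mean squared
    distance from the map's peak (its assumed "focus position")."""
    a = np.maximum(act_map, 0.0)  # keep only positive activations
    total = a.sum()
    if total == 0.0:
        return 0.0
    h, w = a.shape
    peak_r, peak_c = np.unravel_index(np.argmax(a), a.shape)
    rows, cols = np.mgrid[0:h, 0:w]
    sq_dist = (rows - peak_r) ** 2 + (cols - peak_c) ** 2
    return float((a * sq_dist).sum() / total)


def sync_step(weights, eps=0.5, step=0.1):
    """Hypothetical synchronization step: each neuron (row of `weights`)
    moves toward the mean of the neurons whose cosine similarity to it
    is at least 1 - eps. Dissimilar neurons do not interact."""
    norms = np.linalg.norm(weights, axis=1, keepdims=True)
    unit = weights / (norms + 1e-12)
    sim = unit @ unit.T
    near = (sim >= 1.0 - eps).astype(float)  # neighborhood includes self
    neighbor_mean = near @ weights / near.sum(axis=1, keepdims=True)
    return weights + step * (neighbor_mean - weights)


# A map with one active position is perfectly focused; a uniform map is not.
focused = np.zeros((5, 5))
focused[2, 2] = 1.0
diffuse = np.ones((5, 5))
print(locality_penalty(focused), locality_penalty(diffuse))

# Two similar neurons and one dissimilar neuron.
w = np.array([[1.0, 0.0], [0.9, 0.1], [-1.0, 0.0]])
w_new = sync_step(w)
print(np.linalg.norm(w_new[0] - w_new[1]) < np.linalg.norm(w[0] - w[1]))
```

In this sketch the penalty is zero for a single-peak map and grows as activation spreads away from the peak, while the synchronization step shrinks the gap between similar neurons and leaves an isolated neuron unchanged. Because each neuron interacts only with its own neighborhood, the update stays local rather than collapsing all neurons to one point.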